My experience with so-called “artificial intelligence” (AI) goes back to the late 1980s and my research into the application of neural networks in music. This included the design of a system able to learn a musical style and then generate a live accompaniment in the same style—all of which led to my 1996 MPhil thesis, imaginatively titled ‘The Design of a Computer-Assisted Composition System’.

Expose the system to enough Bach, for example, and the idea was it would respond to a suitable musical prompt with a novel Bach-like piece. It’s a similar approach to the large language model (LLM) used by ChatGPT to respond with text: consume vast amounts of someone else’s work to train the network and use it to generate “new” output when prompted.

My experiments last year with ChatGPT, including GPT-4, found it to be wildly contradictory, inaccurate and derivative, which exactly mirrors my experiences with AI and music those many decades ago.

More recently, I’ve been testing various AI text-to-image generation tools. The three I’ve experimented with so far are Bing’s creative search (with image creation powered by DALL-E from OpenAI), runway, and Midjourney (accessed via Discord).

I’ll briefly illustrate what each of these has produced in response to my text prompts. As you’ll see towards the end of this post, I think Midjourney is currently in a class of its own in terms of image quality.

Bing / DALL-E

DALL-E-powered Bing image creation is fun and reasonably fast. The results are highly variable, particularly for people, who can end up with unwanted limbs and distorted features. Here’s a less distorted one I created of a cave man cycling a bike in central London:

I wondered what a similar figure might look like settling down in his cave with a pint of beer. Well, here’s the answer to that:

As is well known—and which I’m sure ChatGPT can readily confirm—cats actually run the world (or at least social media). So here’s an image of the true original creators of the pyramids busy with their work:

As you can see, despite requesting a realistic, high definition photo, it looks more like an illustration.

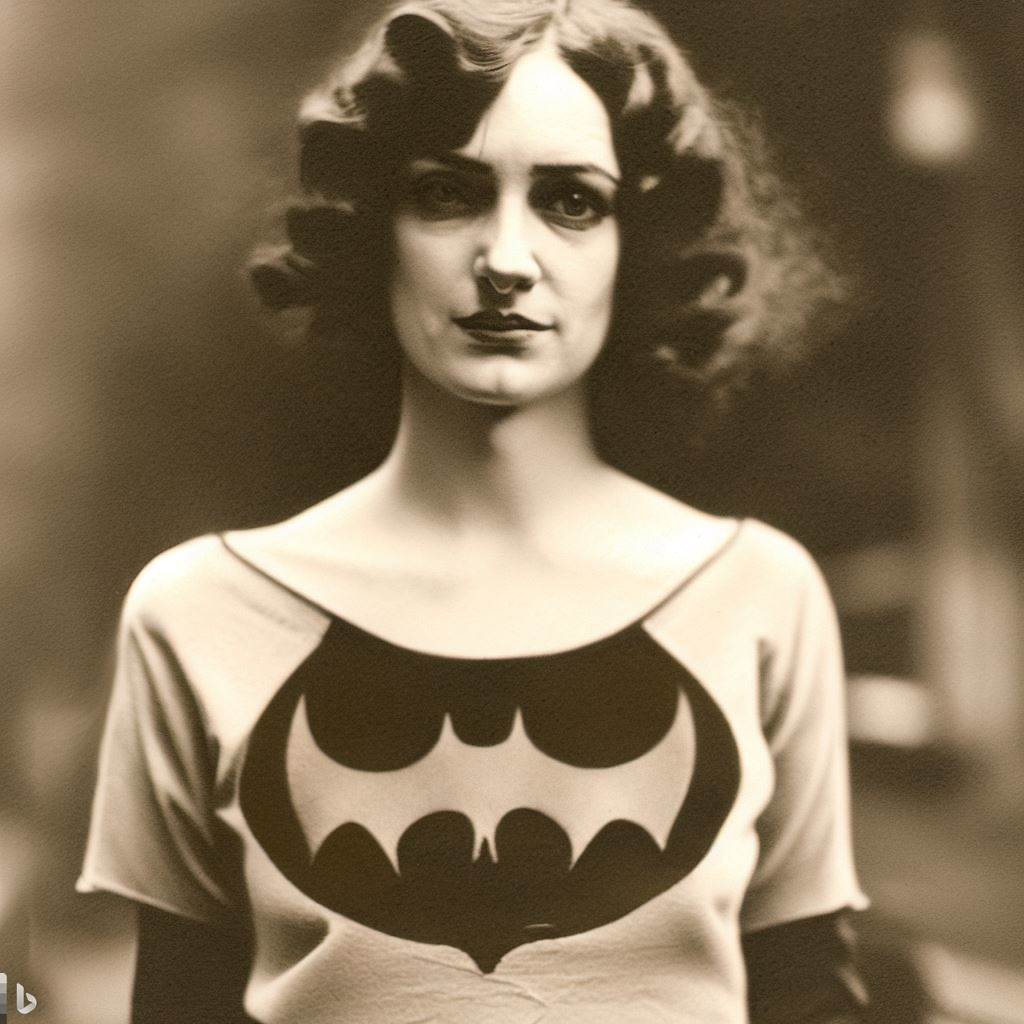

Okay, let’s change the subject entirely, and see how well the system copes when requested to create a recently rediscovered 1920s image of batgirl:

I suspect that’s good enough to fool some people that it really is an old photo, and not something created from a text prompt.

I also experimented with more abstract concepts. I wanted to discover whether I could describe some of my dreams and have them visualised. The results are interesting, if frequently derivative:

Enough abstraction. Let’s get back to attempts at realism and photographic quality. In this one, I described an image of a concert taking place in the grounds of a certain regal castle in Windsor:

I then turned my attention to space, describing an image I wanted for an article about comets:

That’s enough Bing / DALL-E for now—apart from this final one of my cave dweller enjoying a nightcap:

What about the costs? Well, so far I’ve been able to generate as many images as I like with no attempt to make me sign up to a subscription service. But that may be because I’m an Office 365 subscriber, or perhaps I just got lucky.

runway

Next up is runway. I found it similar in quality and reliability to Bing / DALL-E.

Here’s an example of an image where I described a bat person illustrated in the style of Bruegel (although I didn’t specify which Bruegel):

And then I described an image of batman walking through Victorian London:

I also wanted to visualise a Roman centurion walking through today’s London, along with his chariot. The result looks more like something from a Terry Gilliam film:

This next one is a personal favourite, created in response to my description of a nervous giant fish trying to sneak undetected around the back streets:

And finally, here’s the Houses of Parliament in a goldfish bowl:

Runway is also significant in that it can generate video from text. However, access to this latest-generation feature is limited and I haven’t been able to evaluate it yet.

Runway has various subscription plans, from an initial free one, to a Standard plan at $12 per month, to a Pro edition at $28 a month. Those prices apply if you choose to pay annually; otherwise the monthly fees are higher, at $15 and $35 a month respectively. The more you pay, the more you can do, as you’d expect.

Midjourney

I’ve kept the best text to image generator to last. Midjourney is in a class of its own—my experiences suggest it’s a generation ahead of the others.

Let’s start with a still image taken from my imaginary new film about ginger cats attempting to seize power in London. During this revolution, they storm Buckingham Palace and other famous landmarks:

After the success of this feline revolution, there is, of course, a coronation and new queen in town.

Talking of cats, here’s one of a somewhat larger variety: a young leopard in the wild in Malawi (even if his paw magically goes through the tree trunk):

Next up, I described various images to illustrate a storybook I might get around to writing one day—all about an unemployed wizard and his cat:

In this one, my wizard appears a little confused about how best to make a cup of tea—and the cat is clearly embarrassed by his efforts:

And finally, back to another imaginary film. In this opening scene, a detective stands looking out over the city he loves:

Like runway, Midjourney lets you try it for free. Once you’ve burned through the initial credits, if you go for annual billing, a Basic plan will set you back $8 a month, a Standard plan $24 a month, and a Pro plan $48 a month. Choose to pay monthly, and those costs go to $10, $30 and $60, respectively.

What I learned

Testing these tools has been great fun. But they made me very conscious of long-standing issues about the uncredited creative works used to train the underlying systems—and the derivative nature of much of what they produce.

These issues of ownership and original creators’ rights are important. They need to be resolved in a way that respects creatives’ work rather than ripping them off. A decent percentage of the subscription charges needs to go into a fund for onward distribution to the original creators, something like the way the ALCS collects ‘secondary use’ rights on behalf of authors.

I enjoyed using these tools. I’m interested in the feature I haven’t been able to access yet, generating video from text, particularly after seeing some of the runway text-to-video renders doing the rounds on social media. There are also the text-to-interactive-3D-world tools:

As these tools carry on improving, I’m hoping they’ll help me visualise and share the often surreal imagery, unlikely plotlines, and half-remembered dreams long trapped inside my head. And I’m optimistic they’ll also help unleash a wave of untapped creativity in others, particularly as the costs come down to make the tools more accessible.

I’ll post a follow-up article once I’ve had the chance to access and test text to video and other related options. So you can expect a future post full of bizarre events, places, and creatures—oh, and the sounds/music to go with them too, of course. (You have been warned 😄).

p.s. There remain many related concerns about the societal and political impacts of the false images, videos, and audio that can now easily be created by such tools. These issues are outside the topic of this short piece, but are discussed in more detail in my new book Fracture.

Thank you for your investigation and analysis. It was obviously something you really enjoyed. Especially enjoyed the dream interpretations and the possible future uses of AI generated pictures are endless. Less need for book illustrators? Think Moonpig should add the option to use this process in making personalised greeting cards!